Memory/Integrated_Cache

Block Name: Integrated_Cache

Code File Location: VisualSim/actor/arch/Memory/Integrated_Cache

Table of contents

- Block Description

- Prefetching

- Associativity

- Operation

- Cache Operation flows

- Expected fields

- List of models

- Parameter configuration

- How to connect

- Enabling plots

- Statistics

- Error messages and solutions

Block Overview

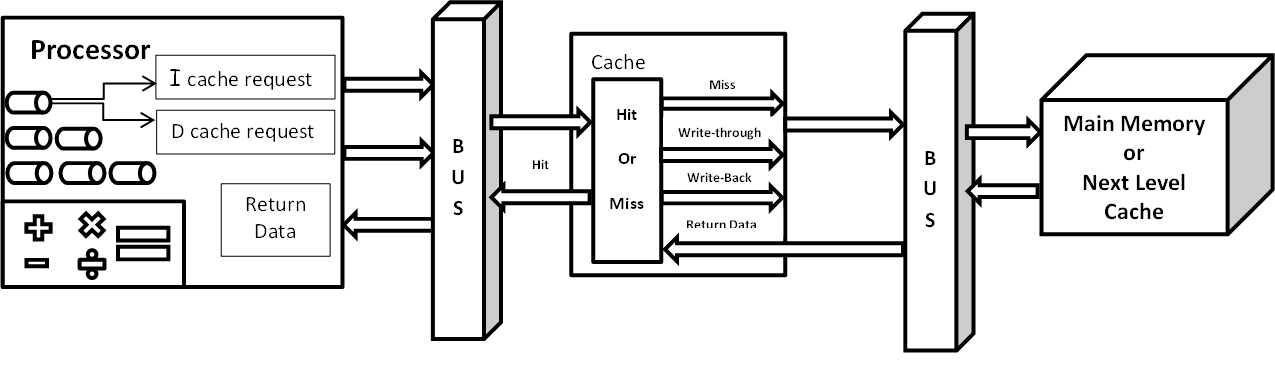

This block can be used as an L1 (instruction or data), L2 or L3 cache. The cache block has two modes of operation: the stochastic cache model and the address-based cache model. It can receive inputs from the processor or from any other device through the bus. The block performs its operation based on the command specified in the requesting data structure. Its hit and miss behavior depends on the cache type (stochastic or address-based).

Description

The cache block receives input from the processor, an IO device or a lower-level cache and checks whether the request is a hit or a miss. On a hit, the data is sent back to the source (Read_Req) or written into the cache (Write_Req). On a miss, the cache sends a request for the corresponding block, with its starting address, to the higher-level cache or secondary memory connected to it. In the address-based (cycle-accurate) model, the requested address in the "A_Address" field is used to determine a hit or miss. Initially the cache is empty, so the first request is a miss and is buffered in the cache. Any other requests that arrive within the address range of the missed block are also buffered until the cache receives the data from the higher-level memory.

Input flow control for the cache block is achieved by adding the field "A_Event_Name" to the input data structure. Output flow control for the cache block is enabled by the "Output_Flow_Control" parameter in the configuration window.

The cache block can be used both by dedicated sources (specified in the Block_Configuration parameter) and by non-dedicated sources. Dedicated sources have a separate memory space that other sources cannot access. Non-dedicated sources can use the cache when the source name 'Common' is specified in the Block_Configuration parameter; the cache then lets these sources access a common space that any device can access.

Stochastic Model:

The cache block uses the instruction hit ratio and the data hit ratio to determine whether a request is a hit or a miss.

Address Based Model:

The cache block uses A_I_Addr and A_D_Addr from the input data structure to check whether the requested address is available in the cache block.

Write Policies:

The cache block supports Write Through and Write Back policies.

With Write Through, data written to the current cache block is updated in the higher-level cache or memory at the same time.

With Write Back, data is written to the cache block and is not sent to the higher-level memory until a read request to, or a replacement of, the corresponding block occurs.

Replacement policy:

The cache block supports Least Recently Used (LRU) and Pseudo-LRU options.

With LRU, the least recently used block is replaced by the new block.

With Pseudo-LRU, the blocks that were loaded at the earliest stages are replaced.

With associativity, the block to replace is chosen by the selected policy within the corresponding cache set.
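The replacement rules above can be illustrated with a small Python model. This is a minimal sketch, not the block's implementation: it assumes the conventional mapping set = (address // block size) mod number of sets, uses textbook LRU within each set (Pseudo-LRU and Random are not modeled), and the class name SetAssociativeLRU is hypothetical. The sizes mirror the 32 KB cache / 2 KB block example given for the Block_Size_Bytes parameter.

```python
from collections import OrderedDict
from typing import Optional, Tuple


class SetAssociativeLRU:
    """Hypothetical N-way set-associative cache with LRU replacement inside each set."""

    def __init__(self, cache_size_bytes: int, block_size_bytes: int, ways: int) -> None:
        num_blocks = cache_size_bytes // block_size_bytes  # e.g. 32 KB / 2 KB = 16 blocks
        self.block_size = block_size_bytes
        self.ways = ways
        self.num_sets = num_blocks // ways
        # One OrderedDict per set, keyed by block number; the first key is the LRU block.
        self.sets = [OrderedDict() for _ in range(self.num_sets)]

    def access(self, address: int) -> Tuple[bool, Optional[int]]:
        """Return (hit, evicted block number or None)."""
        block_no = address // self.block_size
        lines = self.sets[block_no % self.num_sets]  # assumed modulo set mapping
        if block_no in lines:
            lines.move_to_end(block_no)  # hit: mark as most recently used
            return True, None
        evicted = None
        if len(lines) >= self.ways:
            evicted, _ = lines.popitem(last=False)  # set full: drop the LRU block
        lines[block_no] = True
        return False, evicted


cache = SetAssociativeLRU(32 * 1024, 2 * 1024, ways=2)  # 16 blocks -> 8 sets of 2 ways
```

With two ways, blocks 0, 8 and 16 all map to set 0, so a third distinct block in that set evicts whichever of the first two was used least recently. The returned block number marks the way whose contents would be written back before reuse.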

Prefetch Mechanism

By default, the cache block uses sequential prefetching. Initially, the cache prefetches one block of memory from the starting address (configured in Main_Mem_Start_Addr). Whenever the first request for an address in a block is performed, the cache initiates a prefetch of the next sequential block and loads it into the cache. Any request to that next block that arrives while the prefetch is in progress is buffered.
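The sequential prefetch rule can be sketched as follows. This is a hypothetical Python model that assumes a prefetch completes immediately, so the buffering of requests that arrive while a prefetch is outstanding is not modeled; SequentialPrefetcher is an illustrative name, and the values 8192 and 64 are taken from the Main_Mem_Start_Addr example and the Cache Flows block size.

```python
from typing import List, Set


class SequentialPrefetcher:
    """Hypothetical sketch: the first touch of a block prefetches the next sequential block."""

    def __init__(self, start_addr: int, block_size: int) -> None:
        self.block_size = block_size
        self.loaded: Set[int] = set()    # block numbers present in the cache
        self.touched: Set[int] = set()   # blocks that have already been requested
        self.prefetch_issued: List[int] = []
        self._prefetch(start_addr // block_size)  # initial one-block prefetch

    def _prefetch(self, block_no: int) -> None:
        if block_no not in self.loaded:
            self.loaded.add(block_no)    # assume the prefetch completes immediately
            self.prefetch_issued.append(block_no)

    def access(self, address: int) -> bool:
        """Return True on a hit; a miss loads the demanded block."""
        block_no = address // self.block_size
        hit = block_no in self.loaded
        self.loaded.add(block_no)
        if block_no not in self.touched:  # first request to this block
            self.touched.add(block_no)
            self._prefetch(block_no + 1)  # prefetch the next sequential block
        return hit


prefetcher = SequentialPrefetcher(start_addr=8192, block_size=64)
```

A second request to an already-touched block (for example address 8192 + 10 after 8192) is a hit and does not trigger another prefetch.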

Associativity

The cache block can be configured with associativity by setting the parameter N_Way_Associativity to a value greater than 1 (e.g. 2, 4, 8, 16). The user must configure the size and starting address of the main memory (or the next-level memory) to achieve associativity. The set of addresses for each way is predefined based on the sizes (of both the cache and the main memory) and N_Way_Associativity. If the allocated ways overflow, or a new address maps to ways that are already allocated, the corresponding space (the already-allocated way of the same address set) is written back to main memory and the new request is allocated to that way.

Required Fields: Example_Value

A_Source "Processor_1"

A_Destination "I_1_Cache"

A_D_Addr 32L

A_I_Addr 0L

A_Command "Read_Instr"

A_Bytes 32

A_Task_Flag true
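Because a request that lacks one of these fields typically surfaces later as an exception (see Error messages and solutions), it can help to check transactions up front. The helper below is a hypothetical Python check, not a VisualSim API: a dict stands in for the VisualSim data structure, and the `L` (long) suffix is dropped from the address values.

```python
from typing import Dict, List

# Field names copied from the Required Fields list; the helper itself is hypothetical.
REQUIRED_FIELDS = ("A_Source", "A_Destination", "A_D_Addr", "A_I_Addr",
                   "A_Command", "A_Bytes", "A_Task_Flag")


def missing_fields(request: Dict[str, object]) -> List[str]:
    """Return the required fields absent from a request, in list order."""
    return [name for name in REQUIRED_FIELDS if name not in request]


example_request = {
    "A_Source": "Processor_1",
    "A_Destination": "I_1_Cache",
    "A_D_Addr": 32,
    "A_I_Addr": 0,
    "A_Command": "Read_Instr",
    "A_Bytes": 32,
    "A_Task_Flag": True,
}
```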

Use Cases:

Instruction Cache

- A_Command should be "Read_Instr" to read instructions from the cache.

- No_of_D_Blocks in the Block_Configuration parameter can be {0,0} when the whole cache block is used for instructions only.

- Memory space for instructions is allocated to the dedicated sources specified in the Block_Configuration parameter.

Data Cache:

- A_Command can be "Read_Req" or "Write_Req" to read or write data in the cache.

- No_of_I_Blocks in the Block_Configuration parameter can be {0,0} when the whole cache block is used for data only.

- Memory space for data is allocated to the dedicated sources specified in the Block_Configuration parameter.

L2 Cache:

- The Block_Configuration parameter must list the dedicated sources and the number of blocks for the I-Cache and the D-Cache.

- Memory space for both the data and the instructions of the dedicated sources is allocated based on the Block_Configuration parameter.

L3 Cache:

- The whole cache block can be used as a data cache to read or write blocks of data.

The cache block supports the following commands:

- Read_Instr (instruction cache)

- Read_Req (data cache)

- Write_Req (data cache)

- Read (treated as a data access)

- Write (treated as a data write)
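The command list above amounts to a lookup from A_Command to the kind of access. A hypothetical Python table (COMMAND_KINDS and classify are illustrative names, not part of VisualSim) makes a misspelled command fail loudly instead of being silently misinterpreted:

```python
from typing import Tuple

# Hypothetical mapping of each supported A_Command value to (cache side, operation).
COMMAND_KINDS = {
    "Read_Instr": ("instruction", "read"),
    "Read_Req": ("data", "read"),
    "Write_Req": ("data", "write"),
    "Read": ("data", "read"),    # treated as a data access
    "Write": ("data", "write"),  # treated as a data write
}


def classify(command: str) -> Tuple[str, str]:
    """Return (cache side, operation) for a command, or raise ValueError."""
    try:
        return COMMAND_KINDS[command]
    except KeyError:
        raise ValueError(f"unsupported A_Command: {command!r}") from None
```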

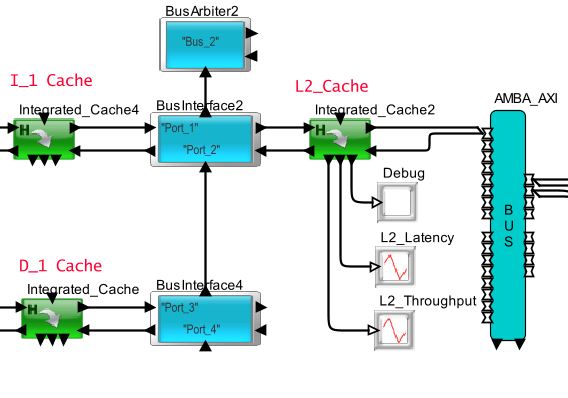

To illustrate usage, see the following example in the BDE:

Cache_Demo

Operation

Stochastic:

The following parameters play an important role in the stochastic model:

1. Data_Hit_Ratio

2. Instruction_Hit_Ratio

3. Loop_Ratio

Cache hits and misses are decided based on these three parameters, which are not used in the address-based model.

Input requests are processed in the cache as follows:

1. Determine whether the request is a hit or a miss.

2. On a hit, send the response to the source.

3. On a miss, keep the input request in the queue and send a request for a block of memory (Block_Size_Bytes) to the next-level memory.

4. When the next-level memory returns the content, the requests waiting in the queue are processed and the data is returned to the source.

Because hits and misses are drawn from the ratios rather than derived from addresses, the results are not address-accurate. This mode is suited to simple architectures.
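A minimal Python sketch of the four stochastic steps, assuming a hit is drawn with probability equal to Data_Hit_Ratio or Instruction_Hit_Ratio. Loop_Ratio is not modeled because its exact effect is not specified here, and the next-level latency is collapsed so that queued misses are answered after the hits. The function and argument names are hypothetical.

```python
import random
from collections import deque
from typing import Iterable, List, Tuple


def run_stochastic(requests: Iterable[Tuple[int, bool]], data_hit_ratio: float,
                   instr_hit_ratio: float, rng: random.Random) -> Tuple[int, int, List[int]]:
    """Return (hits, misses, order in which request ids are answered).

    Each request is (request_id, is_instruction).
    """
    pending = deque()       # misses waiting for the next-level block
    answered: List[int] = []
    hits = misses = 0
    for req_id, is_instr in requests:
        ratio = instr_hit_ratio if is_instr else data_hit_ratio
        if rng.random() < ratio:      # 1. hit with probability equal to the hit ratio
            hits += 1
            answered.append(req_id)   # 2. respond to the source immediately
        else:
            misses += 1
            pending.append(req_id)    # 3. queue it; a block request goes to the next level
    while pending:                    # 4. block returns; waiting requests are answered
        answered.append(pending.popleft())
    return hits, misses, answered
```

Passing a seeded `random.Random` keeps runs reproducible; over many requests the hit fraction converges to the configured ratio.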

Address_Based:

The following input fields are necessary for the address-based model:

1. A_Address

2. A_I_Addr and A_D_Addr, specifically for processor requests.

3. Block_No

The incoming requests are processed as follows:

1. The address value is used to determine a hit or miss.

2. The first request to the cache block is a miss (pre-fetch is not implemented). A request for the block is sent to the next-level memory and the request is kept in the queue.

3. While this miss is outstanding, any other request for the same block (within the address range of the requested block) is also buffered in the queue.

4. Once the data returns from the higher-level memory, the requests waiting for the returned block are processed and sent to the source.

5. If the requested address is not available in the cache, or the cache is full and a new address range is requested, the cache replaces a block using the algorithm selected as the replacement policy.

The above flows apply to Read requests.
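Steps 1 to 4 can be sketched in Python as one outstanding fetch per block, with later requests to the same block buffered until it returns. This is a hypothetical model (AddressBasedCache and block_returned are illustrative names); capacity limits and the step 5 replacement are omitted.

```python
from collections import defaultdict
from typing import DefaultDict, List, Set


class AddressBasedCache:
    """Hypothetical sketch: one fetch per missed block, later requests buffered."""

    def __init__(self, block_size: int) -> None:
        self.block_size = block_size
        self.resident: Set[int] = set()                      # blocks currently cached
        self.waiting: DefaultDict[int, List[str]] = defaultdict(list)  # block -> buffered requests
        self.fetches: List[int] = []                         # block requests sent to next level

    def request(self, req_id: str, address: int) -> List[str]:
        """Return the request ids answered immediately (empty on a miss)."""
        block_no = address // self.block_size
        if block_no in self.resident:
            return [req_id]                     # hit: respond to the source
        if block_no not in self.waiting:
            self.fetches.append(block_no)       # first miss to this block: fetch it
        self.waiting[block_no].append(req_id)   # buffer until the block returns
        return []

    def block_returned(self, block_no: int) -> List[str]:
        """Install the block and answer every request that waited for it."""
        self.resident.add(block_no)
        return self.waiting.pop(block_no, [])
```

With a 64-byte block, requests to addresses 100 and 120 fall in the same block, so only one fetch is issued and both requests are answered when that block returns.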

Write Policies:

Write_Back:

An incoming write request updates the current cache but is not propagated to the next-level memory.

That content is written to the next-level memory only in the following scenarios:

1. A read request for the previously written address. In this case the current content is written to the next-level memory and the response is sent to the source.

2. Cache overflow, when the cache must perform a replacement. The current set of blocks is written to the next-level memory and then the replacement is initiated.

Write_Through:

An incoming write request updates the current cache, and the content is also sent to the next-level memory to be updated.
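The two write policies can be contrasted with a small Python model. It is a sketch that follows the scenarios listed above at per-address granularity (the block itself works on cache blocks); WritePolicyCache and next_level_writes are hypothetical names.

```python
from typing import Dict, List, Set, Tuple


class WritePolicyCache:
    """Hypothetical sketch of the Write_Back and Write_Through behavior described above."""

    def __init__(self, policy: str) -> None:
        if policy not in ("Write_Back", "Write_Through"):
            raise ValueError(f"unknown policy: {policy}")
        self.policy = policy
        self.data: Dict[int, int] = {}
        self.dirty: Set[int] = set()                       # written but not yet propagated
        self.next_level_writes: List[Tuple[int, int]] = [] # (address, value) sent onward

    def write(self, address: int, value: int) -> None:
        self.data[address] = value
        if self.policy == "Write_Through":
            self.next_level_writes.append((address, value))  # update next level now
        else:
            self.dirty.add(address)                           # defer the update

    def read(self, address: int):
        if address in self.dirty:      # Write_Back scenario 1: read of a written address
            self._flush(address)
        return self.data.get(address)

    def evict(self, address: int) -> None:
        if address in self.dirty:      # Write_Back scenario 2: replacement
            self._flush(address)
        self.data.pop(address, None)

    def _flush(self, address: int) -> None:
        self.next_level_writes.append((address, self.data[address]))
        self.dirty.discard(address)
```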

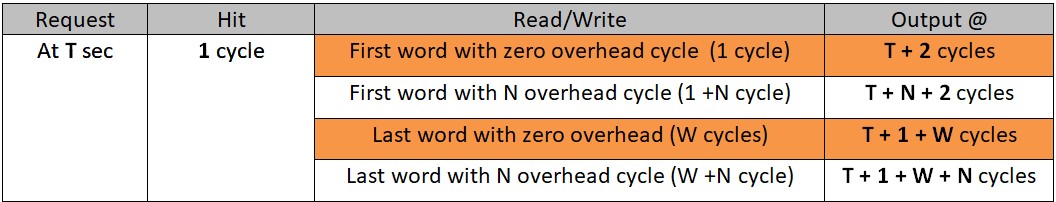

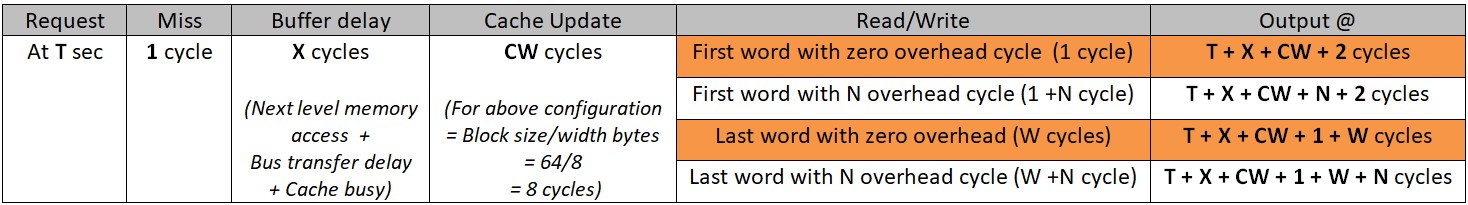

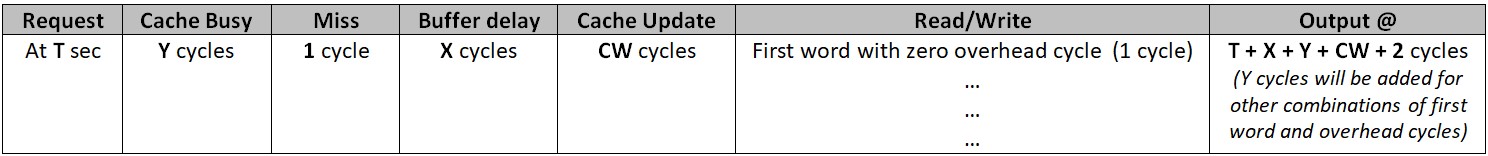

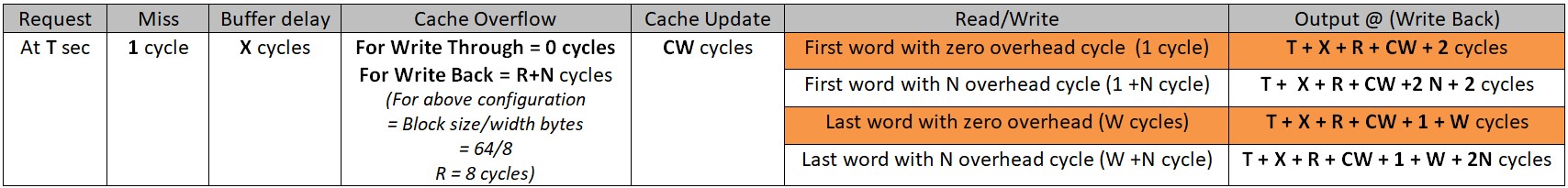

Cache Flows

Cache Speed = 1000.0 MHz (1 ns)

Block Size = 64 Bytes

Width Bytes = 8 Bytes

Overhead cycles = N cycles

Request Size = 32 Bytes

Whole Word access = W cycles (32/8 = 4 cycles)
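The configuration figures above follow from two simple formulas: the clock period is 1000 / Cache_Speed_Mhz ns, and W is the request size divided by the cache width. A Python sketch (the function names are illustrative, and rounding up for sizes that are not a multiple of the width is an assumption):

```python
import math

cache_speed_mhz = 1000.0   # Cache Speed
width_bytes = 8            # Width Bytes
request_bytes = 32         # Request Size


def cycle_time_ns(speed_mhz: float) -> float:
    """Clock period in ns for a clock given in MHz."""
    return 1000.0 / speed_mhz


def whole_word_cycles(request: int, width: int) -> int:
    """W: cycles to move the request through a cache `width` bytes wide (rounded up)."""
    return math.ceil(request / width)


w = whole_word_cycles(request_bytes, width_bytes)
print(f"cycle = {cycle_time_ns(cache_speed_mhz)} ns, W = {w} cycles")
```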

Cache is Free:

for T = 0.0 sec and N = 1 cycle (with above configuration)

case 1: 2ns

case 2: 3ns

case 3: 5ns

case 4: 6ns

for T = 0.0 sec and N = 1 cycle (with above configuration)

case 1: 10ns + X cycles

case 2: 11ns + X cycles

case 3: 13ns + X cycles

case 4: 14ns + X cycles

See the "Buffer Delay observation" section below.

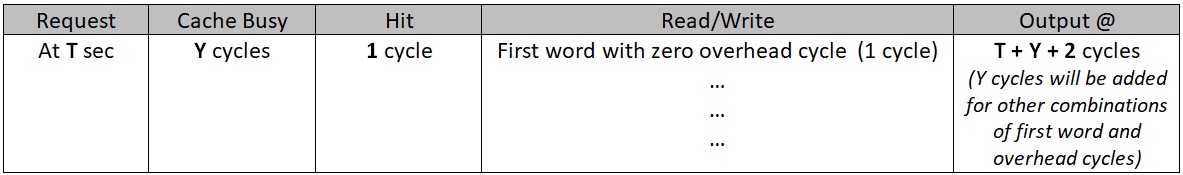

Cache is Busy:

for T = 0.0 sec and N = 1 cycle (with above configuration)

case 1: 2ns + Y cycles

case 2: 3ns + Y cycles

case 3: 5ns + Y cycles

case 4: 6ns + Y cycles

See the "Cache Busy delay observation" section below for the delay at the input request.

for T = 0.0 sec and N = 1 cycle (with above configuration)

case 1: 10ns + X cycles + Y cycles

case 2: 11ns + X cycles + Y cycles

case 3: 13ns + X cycles + Y cycles

case 4: 14ns + X cycles + Y cycles

See the "Cache Busy delay observation" section below for the delay at the input request, and the "Buffer Delay observation" section for the buffer delay.

Cache Overflows:

for T = 0.0 sec and N = 1 cycle (with above configuration)

case 1: 18ns + X cycles

case 2: 20ns + X cycles

case 3: 21ns + X cycles

case 4: 23ns + X cycles

If the request is buffered (Cache busy) due to a cache activity then additional Y cycles will be added.

For buffer delay and cache busy delay please check the next section.

Buffer Delay observation

Please use the "Trace_Array" and "Time_Array" fields. Example implementation in an Expression List:

Trace = input.Trace_Array

Time_Trace = input.Time_Array

Id = (Trace).search(Cache_Name+"_Miss")

T1 = Time_Trace(Id)

Id = (Trace).search(Cache_Name+"_Miss_Process")

T2 = Time_Trace(Id)

Delta = T2 - T1 /*Buffer delay including miss and cache busy*/

Cache Busy delay observation

Please use the "Trace_Array" and "Time_Array" fields. Example implementation in an Expression List:

Trace = input.Trace_Array

Time_Trace = input.Time_Array

Id = (Trace).search(Cache_Name+"_input")

T1 = Time_Trace(Id)

Id = (Trace).search(Cache_Name+"_input_process")

T2 = Time_Trace(Id)

Delta = T2 - T1 /*Cache busy delay at the input request*/
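The same measurement can be made offline if the two arrays are exported from a simulation. The sketch below assumes Trace_Array is a list of trace labels and Time_Array a parallel list of times in seconds, and that the label lookup is an exact match; trace_delta is a hypothetical helper, not part of VisualSim.

```python
from typing import Sequence


def trace_delta(trace: Sequence[str], times: Sequence[float], cache_name: str,
                start_suffix: str, end_suffix: str) -> float:
    """Time between two trace entries of one cache, e.g. "_Miss" -> "_Miss_Process"."""
    labels = list(trace)
    t1 = times[labels.index(cache_name + start_suffix)]  # first matching entry
    t2 = times[labels.index(cache_name + end_suffix)]
    return t2 - t1


# Buffer delay:      trace_delta(trace, times, "L1_Cache", "_Miss", "_Miss_Process")
# Cache busy delay:  trace_delta(trace, times, "L1_Cache", "_input", "_input_process")
```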

Expected Data Structure

| Data Structure Field | Value (Data Type) | Explanation |
|---|---|---|
| A_Bytes (necessary) | 100 | Total number of bytes to be transferred for this transaction. All bursts of this transaction carry this value. |
| A_Bytes_Remaining | 96 | Number of bytes remaining after the current transaction. |
| A_Bytes_Sent | 4 | Number of bytes in this transaction. |
| A_Command (necessary) | "Read" or "Write" | Determines the operation. |
| A_Address (necessary) | 100L | Used to determine hit or miss in the address-based model. |
| A_Source (necessary) | "Processor" | Unique name of the source. When the transaction returns from the destination, the source and destination names are swapped, so the source becomes the destination and the destination becomes the source. |
| A_Destination | "L2_Cache" | Final destination. |
| A_Task_Flag | true | Determines whether a response is needed for a write operation. If true, the response is sent back to the source. |

Input request combination

| Description | A_Command | A_Bytes | A_Bytes_Remaining | A_Bytes_Sent |
|---|---|---|---|---|
| 100 Byte Read at Slave. Bus Width = 4 | Read | 100 | 96 | 4 |
| 100 Byte Read Return at Master | Write | 100 | 0 | 100 |
| 100 Byte Write at Slave | Write | 100 | 0 | 100 |
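The byte counters in this table follow a simple rule: each burst carries at most the bus width, and A_Bytes_Remaining is what is left after the current burst. A hypothetical Python generator reproducing the first row (bus width 4) and the single-burst rows (width 100); it assumes every burst is full width except possibly the last.

```python
from typing import Iterator, Tuple


def bursts(total_bytes: int, bus_width: int) -> Iterator[Tuple[int, int, int]]:
    """Yield (A_Bytes, A_Bytes_Remaining, A_Bytes_Sent) for each burst of one transaction."""
    remaining = total_bytes
    while remaining > 0:
        sent = min(bus_width, remaining)  # at most one bus width per burst
        remaining -= sent
        yield total_bytes, remaining, sent
```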

List of models

VS_AR\demo\memory

- Cache_and_mem.xml

- Cache_Demo.xml

- Proc_Cache_MC.XML

- 4x_proc_Private_L2.xml

- 4x_proc_common_L2.xml

Parameter Configuration

| Parameter | Explanation | Example |
|---|---|---|
| Cache_Name | Defines the name of the cache block. Enter a unique name to avoid conflicts with other blocks. | "Cache_1" |
| Cache_Speed_Mhz | Speed of the cache in MHz. It determines the clock cycle and internal timing of the cache block, and can be used to analyze output timing. | 500.0 |
| Cache_Width_Bytes | Maximum width, in bytes, that this cache block can process in a single clock cycle. It can be used to analyze the output and internal operation. | 4 |
| Cache_Size_Bytes | Overall size of the cache memory. | 16 |
| Block_Size_Bytes | Subdivides the cache memory into a set of blocks; on a miss, the cache requests one block of this size. For example, a 32 KB cache with a 2 KB block size is organized as 16 blocks. These blocks can be assigned to specific sources/processors through the "Block_Configuration" parameter. | 1 |
| Hit Ratio | Used in the stochastic mode of operation to determine whether an input data request is a hit or a miss. Not used in the address-based (cycle-accurate) mode. | 0.8 |
| Loop_Ratio | Used in the stochastic mode to emulate looping in instruction fetch. Not used in the address-based (cycle-accurate) mode. | 0.2 |
| Overhead_Cycles | Overhead cycles added to each request, as required. | 1 |
| Cache_Replacement_Policies | Algorithm used to replace cache blocks. | Pseudo-LRU /*Pseudo-LRU,Least_Recently_Used,Random*/ |
| Cache_Write_Policy | Write policy of this cache block for the entire simulation. The policies are explained in this document. | Write_Through |
| Stochastic_or_Address_Based | Mode of operation of this cache block. Choose Address_Based for cycle-accurate operation, or Stochastic to emulate the cache with less accuracy. | Address_Based /*Stochastic,Address_Based*/ |
| Miss_Memory_Name | Name of the next-level memory, to which this cache sends requests on a miss. If the name is wrong or no next-level memory with this name exists, the buses report the error "Destination not found". | "L2_Cache" |
| Power_Manager_Name | Name of the power table. It enables analysis of the power consumption of this block along with the other blocks in the architecture. | "none" |
| First_Word | Determines the output transaction sequence. If true, the first word (Cache_Width_Bytes long) of the requested bytes is sent out; otherwise, the last word (the requested byte length) is sent out. | false |
| No_of_Statistics | Number of statistics samples for this cache block. Statistics can be viewed by connecting a text display to the stats port at the bottom of the block. | 2 |
| FIFO_Buffers | Currently not used. | 16 |
| Architecture_Name | Name of the architecture setup. It lets the tool check that the model is built correctly. Keep it the same as the name of the Architecture Setup block. | "Architecture_1" |
| Enable_Hello_Messages | Helps the tool find the source and destination of each request, so that blocks can route requests more effectively. | true |
| Output_Flow_Control | Enables flow control on the slave side. | false |
| Input_Flow_Control | Enables input flow control. The master device must maintain the field "Event_Name"; otherwise, the block throws an error. | false |
| N_Way_Associativity | Enables associativity and sets the number of ways. | 1 |
| Main_Memory_Size_KB | Size of the main memory in KB. | 2048 |
| Main_Mem_Start_Addr | Starting address of the main memory. | 8192 |
| Fill_Buffer_Size | Intermediate buffer between the L1 and L2 caches. It holds the maximum number of outstanding requests to L2. | 10 |
| Cache_Type | Type of cache. | "I-Cache" /* "I-Cache", "D-Cache"*/ |
| VIPT_Mode | Enables VIPT (virtually indexed, physically tagged) addressing. | false |

| Port | Explanation |

| to_cache | This is the West Side input port. This block can be connected to two buses, one on either side. This is connected to the processor. |

| fm_cache | This is the West Side output port. This block can be connected to two buses, one on either side. This is connected to the processor. |

| stats | Debug messages and statistics are output on this port. |

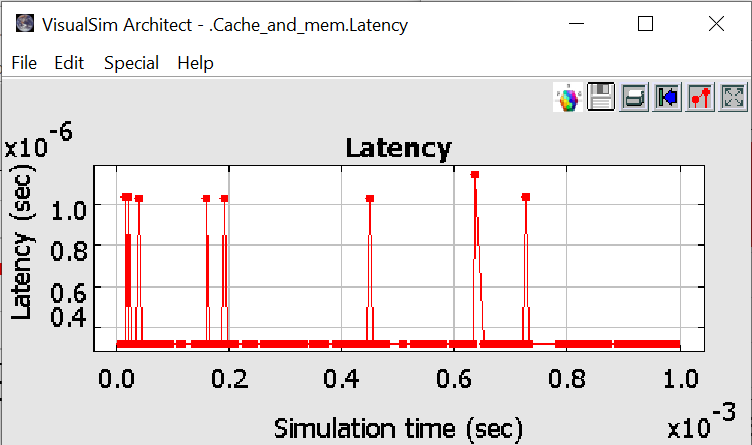

| Throughput |

Throughput of the block during the simulation period. Time data plotter is used to view the plot. |

| Latency |

Latancies of the block for each input. Time data plotter is used to view the plot |

| to_next_cache | This is the East Side output port. This block can be connected to two buses, one on either side. This is connected to the lower level memory. |

| fm_next_cache |

This is the East Side input port. This block can be connected to two buses, one on either side. This is connected to the lower level memory. |

| VIPT_Addr_Check |

This is the North Side input port. It connects the TLB and Cache in VIPT mode. |

How to connect

The image below shows the basic implementation of the cache block. The bottom ports are dedicated to statistics, latency and throughput.

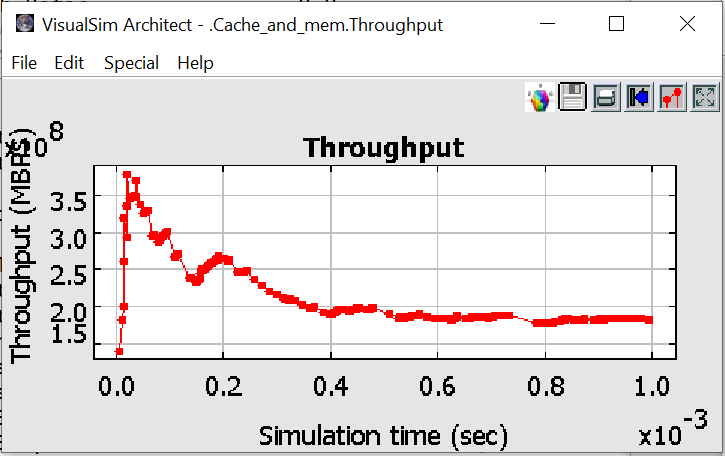

Enabling plots

To observe the latency and throughput of the cache block, connect a Time Data Plotter to the Throughput and Latency ports at the bottom of the block.

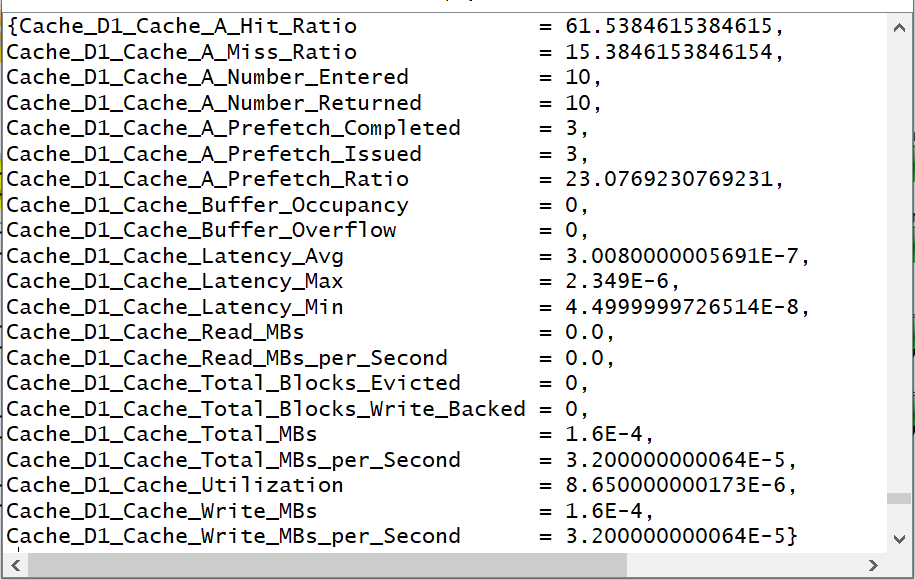

Statistics and Analysis

The cache block generates the following statistics to analyze its internal characteristics.

1. Hit_Ratio:

2. Miss_Ratio:

3. Prefetch_Ratio:

Percentage of hit, miss and prefetch ratio of this cache block during the simulation period.

4. Read_MBs:

5. Write_MBs:

6. Total_MBS:

Data transferred by the cache block, in MB, for reads, writes and overall (read and write).

7. Read_MBs_per_Second:

8. Write_MBs_per_Second:

9. Total_MBs_per_Second:

Throughput in terms of read, write and total (read + write) MB per second.

10. Buffer_Occupancy:

Requests waiting in the buffer that have not yet been processed at the sample time.

11. Utilization:

The utilization of this block in the model, as a percentage of the simulation period.

12. Number_Entered:

13. Number_Returned:

The total number of requests that entered and were returned by the cache block during the simulation period.

15. Prefetch_Completed:

The total number of prefetches issued and completed during the simulation time.

16. Req_Waiting_For_Flow_Control:

Applies to multi-master architectures with input flow control enabled. When the input requests overflow, the cache holds the requests by blocking the flow. This field shows the number of requests/masters blocked because the cache buffer is full.

17. Masters_Waiting_For_Flow_Control:

This field shows the names of the masters whose flow is blocked.

The following image shows the statistics that can be generated for the cache block.

The cache block appends the following fields to the output for analysis:

- Cache_Accessed - Lists the caches accessed by the request. It shows the level at which the data was obtained and the caches that missed.

- Buffer_Delay - Buffer delay at each cache level, including waiting and cache loading.

- Access_Time - Exact data access time at each cache level.

- No_of_Cycles_in_Cache - Number of cycles spent at each cache level.

Example:

Cache_Accessed = {"L1_Cache", "L2_Cache", "L3_Cache"}

Buffer_Delay = {1.5952E-8, 1.131E-8, 0.0}

Access_Time = {3.5714285714286E-10, 3.5714285714286E-10, 4.7619047619048E-10}

No_of_Cycles_in_Cache = {1, 1, 1}
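If Access_Time is assumed to equal No_of_Cycles_in_Cache divided by the cache clock frequency (an assumption; the example does not state the Cache_Speed_Mhz values it used), the per-level clock can be back-calculated from these fields:

```python
# Values copied from the example above.
levels = ["L1_Cache", "L2_Cache", "L3_Cache"]
access_time_s = [3.5714285714286e-10, 3.5714285714286e-10, 4.7619047619048e-10]
cycles = [1, 1, 1]

# Assumption: Access_Time = No_of_Cycles_in_Cache / clock frequency.
implied_mhz = {name: n / t / 1e6 for name, n, t in zip(levels, cycles, access_time_s)}

for name, mhz in implied_mhz.items():
    print(f"{name}: about {mhz:.0f} MHz")
```

Under that assumption, the L1 and L2 values correspond to roughly 2800 MHz and the L3 value to roughly 2100 MHz.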

Error messages and solutions

1. VisualSim.kernel.util.IllegalActionException: in .IC_Test.manager

Because:

java.lang.NullPointerException

Please make sure the Architecture Setup block is available in the model.

Please make sure the necessary fields are available at the input; if not, add them.

2. Error_Number : Script_021

Explanation : GTO (port_token.A_Destination)*, Check argument types, argument values, field names, and variables.

Exception : VisualSim.kernel.util.IllegalActionException: No method found matching {BLOCK = string, DELTA = double, DS_NAME = string, ID = int, INDEX = int, TIME = double}.A_Destination()

Please make sure the necessary fields are available at the input; if not, add them.

3. Error_Number : Script_075B

Explanation : hit_t = QUEUE("Q1",pop), getting QUEUE POP Check argument types, argument values, field names, and variables.

Exception : Check QUEUE name: Q1

4. Error : Issue with RegEx execution

Exception : VisualSim.kernel.util.IllegalActionException: Error invoking function public static java.lang.String VisualSim.data.expr.UtilityFunctions.throwMyException(java.lang.String) throws VisualSim.kernel.util.IllegalActionException

Because:

User RegEx Exception: AXI_Top_Master_1 did not find Slave named DRAM on port number: 1 from Source: L2_Cache

Check the Device_Attached_to_Slave_N parameters.