Back in 2017, Apple introduced the A11, which included the company's first dedicated neural network hardware, which Apple calls a “Neural Engine.” At the time, that hardware could perform up to 600 billion operations per second and was used for Face ID, Animoji and other machine learning tasks. The Neural Engine allows Apple to implement neural networks and machine learning in a more energy-efficient manner than using either the main CPU or the GPU. Today the Neural Engine has advanced to Apple's new M1 processor, which, according to Apple, delivers 15X faster machine learning performance.

Apple revealed back in Q4 2020 that the “M1 features their latest Neural Engine. Its 16‑core design is capable of executing a massive 11 trillion operations per second. In fact, with a powerful 8‑core GPU, machine learning accelerators and the Neural Engine, the entire M1 chip is designed to excel at machine learning.” There’s an excellent chance that today’s patent covers technology built into the M1 processor to help it achieve its breakthrough performance. While the patent application was published today, it was filed in Q4 2019, before the M1 surfaced.

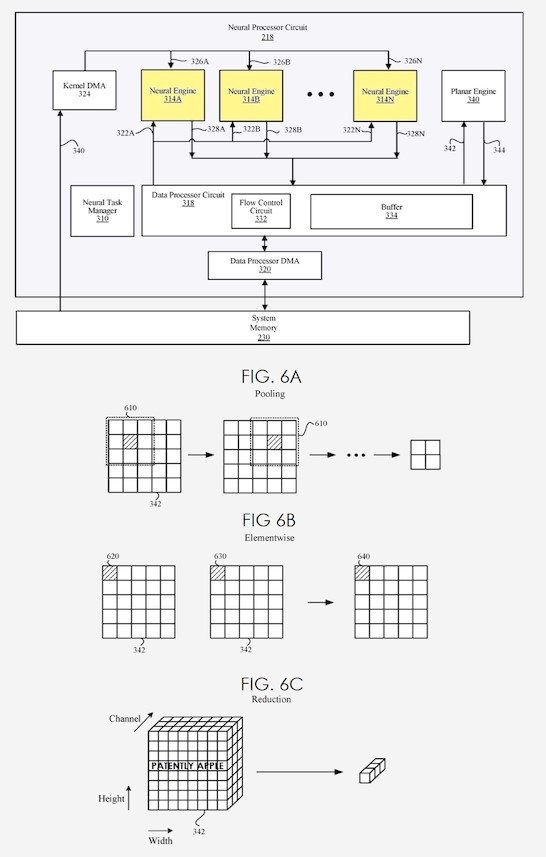

Today, the U.S. Patent Office published a patent application from Apple titled “Multi-Mode Planar Engine for Neural Processor.” Apple’s invention relates to a circuit for performing operations related to neural networks, and more specifically to a neural processor that includes a plurality of neural engine circuits and one or more multi-mode planar engine circuits.

An artificial neural network (ANN) is a computing system or model that uses a collection of connected nodes to process input data. The ANN is typically organized into layers, where different layers perform different types of transformation on their input. Extensions or variants of ANN such as convolutional neural networks (CNN), recurrent neural networks (RNN) and deep belief networks (DBN) have come to receive much attention. These computing systems or models often involve extensive computing operations, including multiplication and accumulation. For example, a CNN is a class of machine learning technique that primarily uses convolution between input data and kernel data, which can be decomposed into multiplication and accumulation operations.
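To make the decomposition concrete, here is a minimal sketch of a 2D convolution written as explicit multiply-accumulate steps. It is not taken from the patent: the function name `conv2d_mac`, the nested-list data layout, and the choice of "valid" output size without kernel flipping (the form most CNN frameworks use) are all assumptions for illustration.

```python
def conv2d_mac(image, kernel):
    """Valid-mode 2D convolution (CNN-style, no kernel flip) written as
    explicit multiply-accumulate (MAC) steps. Hypothetical helper."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(ih - kh + 1):          # slide the kernel down
        row = []
        for c in range(iw - kw + 1):      # slide the kernel across
            acc = 0                       # accumulator for one output value
            for i in range(kh):
                for j in range(kw):
                    # one multiply-accumulate operation
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
kernel = [[1, 0],
          [0, -1]]

result = conv2d_mac(image, kernel)
print(result)  # [[-4, -4], [-4, -4]]
```

Each output value here costs `kh * kw` multiply-accumulates, which is the kind of repetitive arithmetic that dedicated neural engine hardware is built to run in parallel.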

Depending on the types of input data and operations to be performed, these machine learning systems or models can be configured differently. Such varying configurations may include, for example, pre-processing operations, the number of channels in the input data, the kernel data to be used, the non-linear function to be applied to the convolution result, and the application of various post-processing operations. Using a central processing unit (CPU) and its main memory to instantiate and execute machine learning systems or models of various configurations is relatively easy because such systems or models can be instantiated with mere updates to code. However, relying solely on the CPU for the various operations of these machine learning systems or models would consume significant CPU bandwidth as well as increase overall power consumption.

Apple’s invention specifically relates to a neural processor that includes a plurality of neural engine circuits and a planar engine circuit operable in multiple modes and coupled to the plurality of neural engine circuits.

At least one of the neural engine circuits performs a convolution operation of first input data with one or more kernels to generate a first output. The planar engine circuit generates a second output from a second input data that corresponds to the first output or corresponds to a version of input data of the neural processor.

The input data of the neural processor may be data received from a source external to the neural processor, or outputs of the neural engine circuits or planar engine circuit in a previous cycle. In a pooling mode, the planar engine circuit reduces the spatial size of a version of second input data. In an elementwise mode, the planar engine circuit performs an elementwise operation on the second input data. In a reduction mode, the planar engine circuit reduces the rank of a tensor.
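The three modes can be sketched in software as follows. This is only an illustration of the ideas, not Apple's circuit: the function names are hypothetical, and the specific choices (max pooling with non-overlapping windows, addition as the elementwise operation, and summing rows as the reduction) are assumptions, since the patent summary above only says what each mode does to the shape of the data.

```python
def pooling_mode(plane, size=2):
    """Reduce spatial size: max over non-overlapping size x size windows.
    (Max pooling is an assumption; average pooling would also qualify.)"""
    h, w = len(plane), len(plane[0])
    return [
        [max(plane[r + i][c + j] for i in range(size) for j in range(size))
         for c in range(0, w - size + 1, size)]
        for r in range(0, h - size + 1, size)
    ]


def elementwise_mode(a, b, op=lambda x, y: x + y):
    """Apply a binary operation to matching elements of two same-shape planes."""
    return [[op(x, y) for x, y in zip(row_a, row_b)]
            for row_a, row_b in zip(a, b)]


def reduction_mode(plane):
    """Reduce tensor rank from 2 to 1 by summing along each row.
    (Summation is an assumption; any rank-reducing operation fits.)"""
    return [sum(row) for row in plane]


plane = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12],
         [13, 14, 15, 16]]

pooled = pooling_mode(plane)              # 4x4 -> 2x2
doubled = elementwise_mode(plane, plane)  # shape unchanged
reduced = reduction_mode(plane)           # rank 2 -> rank 1

print(pooled)   # [[6, 8], [14, 16]]
print(reduced)  # [10, 26, 42, 58]
```

Note how only the elementwise mode preserves the input shape; pooling shrinks the spatial dimensions while reduction drops a dimension entirely.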

The emphasis on machine learning, or artificial intelligence (AI), is another digital trend. The block diagram in the patent article shows how each neural processor/engine fits into the architecture.

Fig. 6C highlights the 3D chip connectivity for each neural processor/engine. The multi-mode feature of each neural processor/engine means that each node can be configured for a type of AI processing. The 16-core Neural Engine in the latest M1 chip performs approximately 11 trillion operations per second, or roughly 690 billion operations per second per neural core if the work is divided evenly; expect improvements in the next generation from more cores, higher performance per core, or both. Note the ANN, CNN, RNN and DBN neural processor/engine modes of operation. These neural processor/engines interface to a common set of AI planar blocks that ultimately interface to memory; expect memory addresses to be at the byte level.
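As a rough check on the per-core figure, the short calculation below divides Apple's stated 11 trillion operations per second by the 16 cores. It assumes the throughput is split evenly across cores, which Apple has not confirmed; the variable names are illustrative only.

```python
# Figures quoted by Apple for the M1 Neural Engine.
TOTAL_OPS_PER_SECOND = 11e12   # 11 trillion operations per second
NEURAL_ENGINE_CORES = 16

# Assumption: throughput is divided evenly across all cores.
per_core = TOTAL_OPS_PER_SECOND / NEURAL_ENGINE_CORES

print(f"{per_core / 1e9:.1f} billion ops/s per core")  # 687.5 billion ops/s per core
```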

Web Reference: https://www.patentlyapple.com/patently-apple/2021/04/apple-reveals-a-multi-mode-planar-engine-for-a-neural-processor-that-could-be-used-in-a-series-screa.html